Decentralized GPU Cloud: The 2025 Buyer’s Guide for AI & Rendering

A decentralized GPU cloud is a distributed marketplace where independent providers (data centers, enterprises, and individuals) rent out GPU capacity over a network rather than through a single hyperscaler. Engineering teams use it alongside or instead of AWS, GCP, or Azure to unlock cheaper, more flexible GPU capacity for AI, gaming, and 3D rendering workloads, especially in GPU-constrained regions.

Introduction

Decentralized GPU clouds sit at the intersection of web3 infrastructure, cloud computing, and the AI boom. Instead of leasing GPUs from a single provider like AWS, GCP, or Azure, you tap into a distributed GPU compute network that aggregates capacity from many independent nodes around the world.

This model is gaining attention as GPU demand explodes: analysts expect worldwide AI spending to reach roughly $1.5 trillion in 2025 and continue rising as hyperscalers pour hundreds of billions into AI-capable data centers. At the same time, on-demand A100/H100 instances on major clouds routinely cost $3–$4 or more per GPU-hour.

In this guide, written for AI leads, CTOs, and infra/FinOps teams in the US, UK, Germany and wider Europe, we’ll break down:

What a decentralized GPU cloud actually is

How costs and performance compare to AWS/GCP/Azure

Where decentralized GPU clouds fit best for LLM training, inference, and rendering

Compliance, data residency, and DePIN token considerations

A step-by-step path to deploy a real PyTorch or TensorFlow workload

How to use decentralized GPUs as a “cost valve” in a hybrid, multi-cloud stack

Where relevant, Mak It Solutions can help you design and implement this stack end-to-end, from AI workloads to cloud orchestration and analytics.

What Is a Decentralized GPU Cloud?

A decentralized GPU cloud is a distributed network of independent GPU providers (data centers, enterprises, and individuals) connected through a marketplace layer. Instead of renting GPUs from a single hyperscaler like AWS or GCP, you tap into pooled GPUs across many locations, matched to your AI or rendering workloads with automated scheduling, pricing, and verification.

In other words, it’s web3 GPU cloud infrastructure: a coordination layer that turns fragmented GPU hardware into something that feels like a cloud, but with more open pricing and supply.

From Centralized Clouds to Decentralized GPU Networks

A centralized cloud GPU (AWS, GCP, Azure) is operated, priced, and fully controlled by a single vendor. You get mature tooling and enterprise support, but you’re bound to their SKUs, regions, and capacity constraints.

A decentralized GPU cloud:

Aggregates GPUs from many owners (data centers, enterprises, prosumers)

Uses a protocol or marketplace to match jobs to nodes

Often uses tokens or on-chain payments for billing and incentives

Exposes APIs or SDKs for submitting jobs (AI training, inference, rendering)

Projects like io.net, Akash Network, Aethir, Nosana, Fluence, OctaSpace, Render Network and others all implement variations of this idea for AI and rendering workloads.

The result feels like a distributed GPU compute network: you request compute, the network finds suitable nodes, and the protocol coordinates execution and rewards.

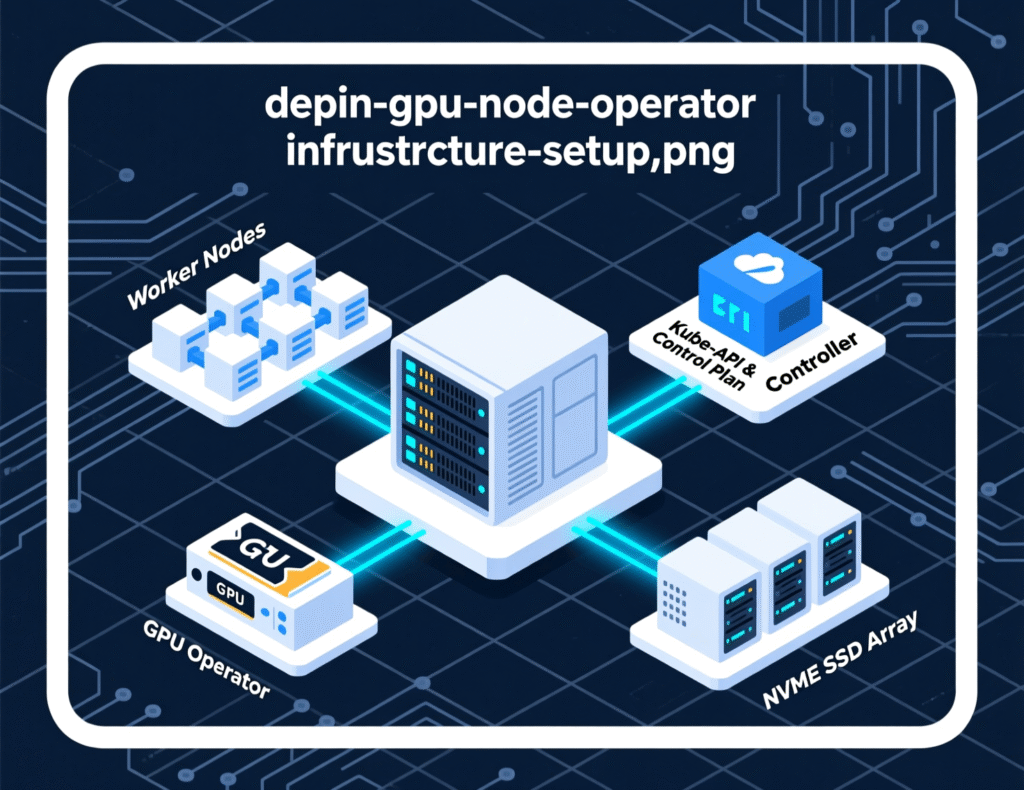

Nodes, Marketplace, Tokens, and Orchestration Layers

Most decentralized GPU clouds share four core building blocks:

GPU Nodes

Individual machines or clusters with NVIDIA (A100/H100/H200, 4090, etc.) or AMD GPUs.

Run an agent that registers resources, receives jobs, and reports status.

Marketplace / Order Book

Bids/asks for GPU capacity (price, GPU model, region, reliability).

Can support spot-like auctions, fixed rates, or long-running leases.

Tokens / Payments

Some platforms use crypto tokens for payments and incentives (e.g., Aethir, Akash, Render)

Others rely on fiat or stablecoins with Web2-style billing.

Orchestration & Verification Layer

Schedules workloads onto nodes (Kubernetes-like semantics in some cases).

Implements proof-of-compute or result verification to ensure nodes actually ran your job.

Handles retries, replication, and sometimes confidential computing or encryption.

On top of this, providers expose Docker, Kubernetes, or language-specific SDKs so your engineers can treat it like “just another cloud,” abstracting most blockchain details away.
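To make the marketplace layer more concrete, here is a minimal sketch of how a job could be matched to the cheapest offer that satisfies its constraints. The `Offer` and `JobRequest` types, their field names, the reliability score, and the prices are all hypothetical illustrations, not the schema or API of any specific network.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    """A provider's ask for GPU capacity (hypothetical schema)."""
    node_id: str
    gpu_model: str
    region: str
    price_per_hour: float  # USD per GPU-hour, illustrative values only
    reliability: float     # 0.0-1.0 reputation score


@dataclass(frozen=True)
class JobRequest:
    """Constraints the buyer attaches to a job (hypothetical schema)."""
    gpu_model: str
    allowed_regions: frozenset
    max_price_per_hour: float
    min_reliability: float


def match_offer(job: JobRequest, offers: list) -> Optional[Offer]:
    """Return the cheapest offer meeting every constraint, or None."""
    eligible = [
        o for o in offers
        if o.gpu_model == job.gpu_model
        and o.region in job.allowed_regions
        and o.price_per_hour <= job.max_price_per_hour
        and o.reliability >= job.min_reliability
    ]
    # Cheapest first; break price ties in favour of the more reliable node.
    return min(eligible, key=lambda o: (o.price_per_hour, -o.reliability), default=None)


offers = [
    Offer("node-a", "H100", "eu-frankfurt", 2.10, 0.99),
    Offer("node-b", "H100", "us-east", 1.80, 0.97),       # wrong region
    Offer("node-c", "H100", "eu-amsterdam", 1.95, 0.90),  # reliability too low
]
job = JobRequest("H100", frozenset({"eu-frankfurt", "eu-amsterdam"}), 2.50, 0.95)
best = match_offer(job, offers)
print(best.node_id if best else "no match")  # prints "node-a"
```

Real networks typically add auctions, leases, and verification on top of this kind of filter-then-rank step, so treat it as a mental model rather than a description of any one protocol.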

How It Differs from AWS, GCP, and Azure GPU Clouds

Compared with hyperscalers, a decentralized GPU cloud differs in four areas:

Sourcing

Hardware can be anywhere: Tier-3 data centers in Frankfurt, a gaming PC in Manchester, or an enterprise cluster in New York.

Pricing

More market-driven; some networks advertise up to 70% lower GPU prices vs AWS for certain SKUs.

Control & Compliance

Hyperscalers provide strong, standardized compliance (SOC 2, ISO 27001) out of the box; decentralized networks vary and require more due diligence.

Ecosystem Integration

AWS/GCP/Azure tie deeply into storage, networking, IAM, and DevOps tools; decentralized networks often focus just on compute and basic storage.

So the question for buyers is less “AWS or decentralized?” and more “Where do decentralized GPU clouds complement AWS/GCP/Azure for the best cost-to-risk profile?”

Why Teams Consider Decentralized GPU Clouds vs AWS/GCP/Azure

Teams typically explore decentralized GPU clouds when centralized GPU instances are too expensive, sold out, or limited in specific regions. The tradeoff: potentially lower cost and more flexible capacity in exchange for more attention to provider selection, SLAs, and compliance.

In practice, most organizations don’t rip out AWS or Azure; they use decentralized GPUs as a pressure-relief valve for cost, capacity, and specific workloads.

Token-Based Pricing vs On-Demand Cloud GPU Rates

The key economic driver is simple: GPU hour price spread.

On major clouds, on-demand A100 40GB instances often land around $3.7–$4.1 per hour; reserved or spot capacity can be cheaper but comes with commitments or volatility.

Decentralized GPU networks (Akash, Fluence, etc.) list A100/H100/H200 and 4090 GPUs in the $0.36–$3.6/hr range depending on model, region, and SLA.

That price delta is amplified when:

You’re running LLM inference at scale (tens of thousands of GPU hours/month).

You need bursty capacity for short-lived training runs.

You don’t want long-term commitments like 1–3 year reservations.

Token-based pricing adds complexity:

Token prices can be volatile (MiCA and national securities/tax rules may apply in the EU).

However, some platforms denominate in USD or stablecoins and settle on-chain behind the scenes, so engineering teams can treat it as normal billing.

For FinOps teams, the mental model is: treat decentralized GPU clouds like a cheaper spot/alternate provider, then benchmark total cost of ownership (compute + egress + storage + operational overhead).
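The total-cost-of-ownership comparison above can be sketched as a small calculation. The rates, usage figures, and engineer hourly cost below are placeholder assumptions chosen for illustration (the GPU rates sit inside the ranges quoted earlier); substitute your own measured numbers.

```python
from dataclasses import dataclass


@dataclass
class ProviderRates:
    gpu_per_hour: float          # USD per GPU-hour
    egress_per_gb: float         # USD per GB transferred out
    storage_per_gb_month: float  # USD per GB stored per month


@dataclass
class MonthlyUsage:
    gpu_hours: float
    egress_gb: float
    storage_gb: float
    ops_hours: float  # engineer time spent operating this provider


def monthly_tco(usage: MonthlyUsage, rates: ProviderRates, ops_hourly_cost: float) -> float:
    """Compute + egress + storage + operational overhead, in USD per month."""
    return (
        usage.gpu_hours * rates.gpu_per_hour
        + usage.egress_gb * rates.egress_per_gb
        + usage.storage_gb * rates.storage_per_gb_month
        + usage.ops_hours * ops_hourly_cost
    )


# Illustrative assumptions only, not quoted prices.
hyperscaler = ProviderRates(gpu_per_hour=4.00, egress_per_gb=0.09, storage_per_gb_month=0.02)
decentralized = ProviderRates(gpu_per_hour=1.50, egress_per_gb=0.05, storage_per_gb_month=0.03)

# Same workload, but assume the decentralized option needs more operator time.
usage_hyper = MonthlyUsage(gpu_hours=2000, egress_gb=500, storage_gb=1000, ops_hours=10)
usage_decent = MonthlyUsage(gpu_hours=2000, egress_gb=500, storage_gb=1000, ops_hours=30)

tco_hyper = monthly_tco(usage_hyper, hyperscaler, ops_hourly_cost=100.0)
tco_decent = monthly_tco(usage_decent, decentralized, ops_hourly_cost=100.0)
print(f"hyperscaler:   ${tco_hyper:,.2f}/month")
print(f"decentralized: ${tco_decent:,.2f}/month")
```

Including `ops_hours` is the point of the exercise: a lower GPU rate only wins if the extra operational effort does not eat the difference.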

Performance & Latency: When Distributed GPUs Match or Beat Centralized Clouds

Decentralized GPU clouds can match or beat centralized clouds on raw performance when:

You get access to newer GPUs (H100, H200, 4090) not yet widely available in your chosen AWS/Azure region.

The network routes you to nearby nodes (e.g., Frankfurt for Berlin customers, New York for East Coast users).

You can co-locate data and compute to minimize cross-region hops.

Latency considerations:

For offline training (multi-hour jobs), you mainly care about throughput and job completion time.

For online inference (sub-100 ms targets), you need either:

Nodes near your users (e.g., London or Dublin for UK fintech AI), or

An edge/hybrid setup where only heavy batch inference runs remotely.

If low latency into a specific VPC is critical, AWS/GCP/Azure still have an edge. But for batch-style workloads, a well-chosen decentralized GPU cloud can be indistinguishable in performance while far cheaper.

Reliability, SLAs, and Uptime in a Decentralized GPU Network

Centralized clouds give you familiar SLA numbers (e.g., 99.9% uptime for particular managed services). Decentralized GPU networks handle reliability differently:

Redundancy

Jobs can be replicated across multiple nodes; failed nodes are penalized.

Staking / Slashing

Node operators may have tokens at stake, which are slashed for misbehavior.

Reputation Scores

Nodes accumulate scores based on job success, latency, and customer feedback.

For mission-critical workloads in finance, healthcare, or public sector, you’ll typically:

Use decentralized GPUs for non-critical or burst workloads, while

Keeping core systems of record and highly regulated processing in a more traditional cloud with signed BAAs, DPAs, or BaFin-aligned contracts.

In short: decentralized GPU clouds are reliable enough for many AI and rendering workloads, but enterprise buyers must layer their own SRE practices (monitoring, health checks, retries, blue-green deployments) on top. Mak It Solutions often helps clients design those guardrails.
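As one example of such a guardrail, the sketch below retries a failed job submission with exponential backoff and jitter. `submit_job` stands in for whatever call your provider's SDK or CLI wrapper exposes; it is a hypothetical zero-argument callable, not a real API.

```python
import random
import time


def run_with_retries(submit_job, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call submit_job() until it succeeds or attempts run out.

    submit_job is any zero-argument callable that raises on failure
    (a placeholder for a provider-specific submission call).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return submit_job()
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # Exponential backoff (1x, 2x, 4x, ...) plus jitter so that many
            # clients do not retry against the network at the same instant.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))


# Demo: a submission that fails twice before a healthy node accepts it.
attempts = {"count": 0}


def flaky_submit():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("node unavailable")
    return "job-accepted"


# sleep is stubbed out here so the demo finishes instantly.
print(run_with_retries(flaky_submit, sleep=lambda seconds: None))
```

In production you would likely catch only the provider's transient error types rather than every `Exception`, and pair retries with health checks so that repeatedly failing nodes are avoided.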

Decentralized GPU Cloud for AI Workloads

AI teams get the most value from decentralized GPU clouds when running bursty or large-scale jobs (LLM fine-tuning, long-running training, and high-throughput inference) where cheaper or more abundant GPUs outweigh the need for deep integration with a single hyperscaler.

Think of this as on-demand GPU compute for inference and training that you can dial up or down alongside your main cloud provider.

Best-Fit AI Workloads: Training, Fine-Tuning, and Inference at Scale

Best-fit workloads typically include:

LLM fine-tuning and pre-training

Multi-GPU, long-running jobs where a 20–50% price difference over thousands of GPU hours becomes very material.

High-throughput inference

Chatbots, recommendation engines, fraud models, and background agents that can tolerate 50–150 ms of latency if priced aggressively.

Experimentation bursts

Hackathons, research sprints, or evaluation campaigns where you temporarily need 10x more GPUs than usual.

For example:

A San Francisco AI startup might run its production inference on GCP but switch fine-tuning jobs to a decentralized GPU marketplace when GCP A100/H100 capacity gets expensive.

A London healthtech using anonymized, non-PHI test data could run large-scale model evaluations on a decentralized network while keeping live NHS-adjacent workloads in a UK-hosted, UK-GDPR-aligned cloud.

A Berlin SaaS vendor might send overnight training jobs to nodes in Frankfurt or Amsterdam to stay close to EU regions.

Decentralized GPU Cloud for AI vs AWS: Cost & Scalability for LLM Inference

For LLM inference, the cost model is straightforward:

On AWS/GCP/Azure, you pay an hourly GPU price plus storage and network; scaling often means provisioning more GPU instances or adopting managed services.

On decentralized networks, you bid for GPUs and can scale horizontally to many nodes in parallel.

Because decentralized platforms often undercut hyperscalers on per-hour GPU prices, LLM inference at scale is a prime candidate for a “decentralized GPU cloud vs AWS cost comparison for LLM inference” POC. Over a year, even a modest 20–30% discount can add up to six or seven figures for high-volume inference.
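The "six or seven figures" claim is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below assumes a $4.00 per GPU-hour baseline (the upper end of the on-demand range cited earlier) and two hypothetical monthly volumes; none of these are measured figures.

```python
def annual_savings(gpu_hours_per_month: float, baseline_rate: float, discount: float) -> float:
    """USD saved per year if every GPU-hour is `discount` cheaper than baseline."""
    return gpu_hours_per_month * 12 * baseline_rate * discount


BASELINE_RATE = 4.00  # USD per GPU-hour, assumed for illustration

for hours in (20_000, 100_000):      # hypothetical monthly GPU-hours
    for discount in (0.20, 0.30):    # the "modest" discount range from the text
        saved = annual_savings(hours, BASELINE_RATE, discount)
        print(f"{hours:>7,} GPU-h/month at {discount:.0%} off: ${saved:,.0f}/year")
```

Under these assumptions, 20,000 GPU-hours per month lands in six-figure annual savings and 100,000 GPU-hours per month approaches or crosses seven figures, before accounting for any extra egress or operational cost.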

The trade-offs to watch:

Observability: logs/metrics may be less integrated into your main APM stack.

Networking: egress into your VPC or data warehouse must be designed carefully.

Vendor maturity: support may rely more on communities and Discord/Slack than enterprise tickets.

Example Providers for AI in US/UK/EU (Aethir, Akash, io.net, Fluence, OctaSpace, DeNube, Nosana)

Without endorsing specific vendors, here’s how the landscape roughly clusters:

io.net

Focused on AI workloads, aggregating tens of thousands of GPUs with claims of up to 70% cheaper than AWS for some use cases.

Akash Network

A decentralized compute marketplace listing H100, H200, and 4090 GPUs across multiple regions, often at significantly lower hourly rates than hyperscalers.

Aethir

Positions itself as a DePIN-powered, decentralized GPU cloud aimed at AI, gaming, and cloud streaming workloads globally.

Fluence, OctaSpace, DeNube, Nosana

Alternative DePIN-style GPU rental marketplaces, with mixes of Tier-3/4 data centers and independent providers.

For AI startups in the USA searching “best decentralized GPU cloud for AI startups in USA”, the practical approach is to shortlist 2–3 providers, run 1–2 inference microservices on each, then compare cost, latency, and support against a baseline AWS/GCP deployment.

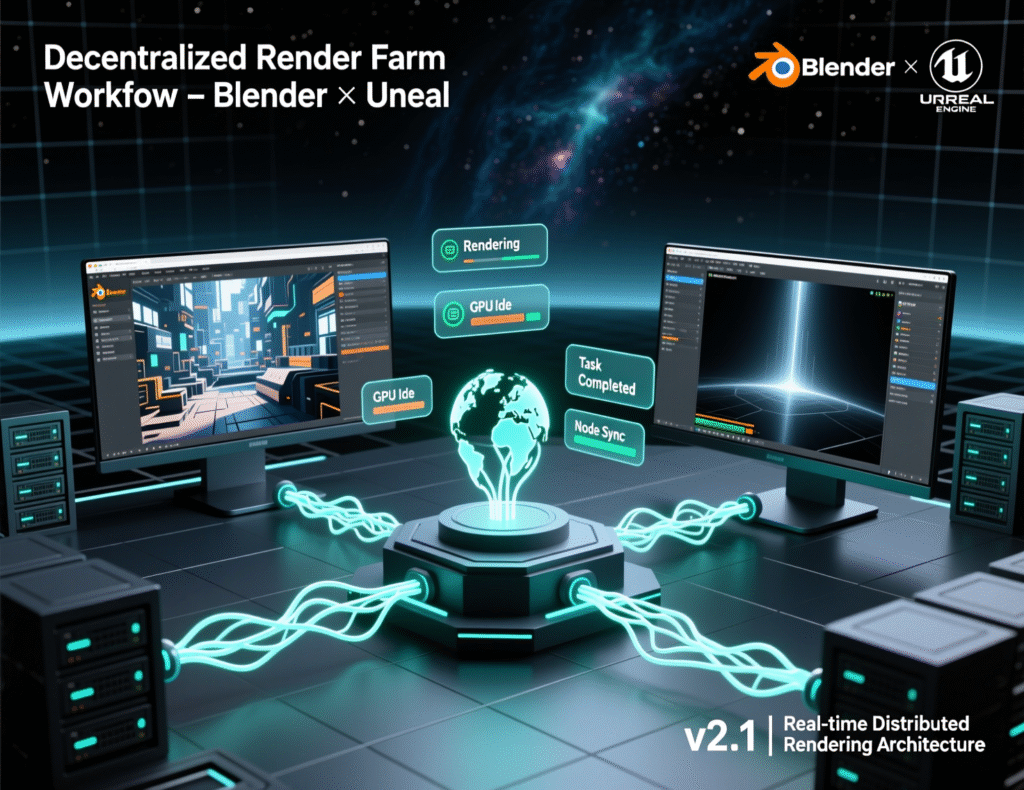

Decentralized GPU Rendering & Render Farms

A decentralized GPU render farm lets 3D/VFX studios tap into a global pool of GPUs, often at lower cost than fixed render farms, while paying per rendered frame or job instead of maintaining dedicated infrastructure.

Here the value proposition is particularly strong for VFX, gaming, architecture visualization, and digital twins.

From Traditional Render Farms to Web3 Render Networks (Render Network & Competitors)

Traditional render farms:

Require upfront contracts or credits.

Are limited to a few physical data centers.

Often price based on CPU/GPU time plus storage and egress.

Render Network (RNDR) and similar web3 render networks flip this by matching artists and studios with GPU providers worldwide. The Render Network describes itself as a decentralized GPU rendering platform that harnesses idle GPU power for next-generation media production, including Cinema 4D and Octane workflows.

This approach fits studios whose workloads are:

Spiky (project-based)

Highly parallel (frame/shot-based rendering)

Geographically distributed (teams in London, Berlin, Austin collaborating)

Decentralized GPU Rendering for Blender, Octane, Cinema 4D & Unreal

Modern decentralized render networks increasingly support:

Blender (Cycles, Eevee)

OctaneRender

Cinema 4D

Unreal Engine and related pipelines

Typically, artists:

Export scenes/projects using a plugin or command-line tool.

Submit jobs to the decentralized render marketplace.

Receive rendered frames, which they composite locally or in the cloud.

For a London-based VFX shop working on a Netflix-style series, a decentralized render network can offload heavy overnight scenes to GPUs in Dublin or Amsterdam, while keeping dailies and previews local. For an architectural studio in Munich, a decentralized render farm with EU data residency for architectural visualization might be enough to satisfy client requirements that projects stay within EU data centers.

Pricing & Performance: Decentralized Render Farm vs Centralized Cloud Rendering in US/UK/EU

On centralized clouds, GPU rendering often piggybacks on the same A100/H100/H200 instances used for AI workloads. This can be expensive if you pay on-demand for long render queues. On decentralized render networks, you typically:

Pay per frame, per job, or per GPU hour, in tokens or fiat.

Benefit from a wide pool of GPUs that can parallelize large projects.

Can choose between “fast but more expensive” and “cheap but slower” node classes.

For studios in New York, London, or Berlin, a benchmarking approach works well:

Render a controlled set of frames on your existing cloud or physical farm.

Render the same frames on Render Network or similar web3 render providers.

Compare:

Time-to-complete

Cost per frame

Consistency and visual parity of output.

For many VFX/gaming teams, decentralized rendering becomes a secondary render pipeline used when internal GPUs or fixed render contracts are saturated.

GPU DePIN & Monetizing Idle GPUs

DePIN (decentralized physical infrastructure networks) for GPUs enable individuals and data centers to contribute idle GPUs to a shared network and earn rewards (tokens or revenue share) when their hardware is used for AI, gaming, or rendering workloads.

For infra-minded teams, this opens a second angle: not just consuming GPUs, but also supplying them.

What a DePIN GPU Network Is and How Rewards Work

A GPU DePIN network connects:

Providers: people or companies with idle GPUs.

Consumers: AI and rendering teams buying compute.

Protocol: handles matching, metering, payments, and slashing.

Reward mechanisms include:

Job-based payouts: get paid per GPU-hour or per completed render.

Token emissions: networks may subsidize early providers by distributing governance or reward tokens.

Reputation bonuses: higher-quality nodes earning more jobs or better rates.

From a compliance and tax perspective, EU providers should watch MiCA and domestic tax rules to understand how tokens are treated (e.g., capital gains vs income).

Monetizing Idle GPUs at Home or in Data Centers in the US, UK, and EU

If you run internal GPU clusters (e.g., for a New York fintech, an NHS-adjacent AI lab in Manchester, or a Frankfurt-based bank), you may have idle capacity on evenings or weekends.

DePIN networks let you sell that excess capacity when:

You can isolate external jobs from internal data (e.g., via separate hosts or strong hypervisor isolation).

Your legal and InfoSec teams are comfortable with the risk profile.

You account for local energy prices, wear-and-tear, and tax.
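The last condition can be turned into a quick per-GPU margin estimate. Every number below (payout, power draw, energy price, wear allowance, tax rate) is a made-up placeholder, and the flat tax on positive profit is a simplifying assumption; actual treatment of token income varies by jurisdiction.

```python
def net_hourly_margin(
    payout_per_hour: float,
    power_kw: float,
    energy_price_per_kwh: float,
    wear_per_hour: float,
    tax_rate: float,
) -> float:
    """Estimated take-home per GPU-hour after energy, wear-and-tear, and tax."""
    energy_cost = power_kw * energy_price_per_kwh
    pre_tax = payout_per_hour - energy_cost - wear_per_hour
    # Simplification: tax applies only when the hour is profitable.
    return pre_tax * (1 - tax_rate) if pre_tax > 0 else pre_tax


# Placeholder inputs for a single consumer-grade GPU.
margin = net_hourly_margin(
    payout_per_hour=0.40,       # what the network pays, in fiat terms
    power_kw=0.45,              # draw under load
    energy_price_per_kwh=0.30,  # local electricity price
    wear_per_hour=0.05,         # depreciation allowance
    tax_rate=0.25,
)
print(f"net margin: {margin:.3f} per GPU-hour")
```

A negative result means the hardware costs more to run than it earns, which is why local energy prices often decide whether supplying capacity makes sense at all.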

Home GPU providers (e.g., gamers with 4090s in Austin or Berlin) might join networks like Render or io.net to earn additional income, but enterprises typically focus on monetizing data-center-grade GPUs where they already have cooling and power optimized.

Running a Decentralized GPU Node: Hardware, Network, and Compliance Basics

At a high level, running a node requires:

Hardware

Recent NVIDIA GPUs (A100/H100/4090+), sufficient VRAM, reliable storage.

Network

Stable broadband, low latency to target regions, proper firewall rules.

Security & Compliance

Hardened OS, patching, secrets management.

For EU/UK financial or healthcare workloads, alignment with SOC 2 / ISO 27001-style controls and cloud outsourcing guidance (e.g., BaFin).

For corporates, Mak It Solutions can help design this as part of a hybrid strategy: you use decentralized networks to sell idle capacity while retaining strict segmentation from internal production systems.

Compliance, Data Residency & Risk for US, UK, Germany & EU

For regulated industries in the US, UK, and EU, the key question is whether a decentralized GPU cloud can guarantee that personal or financial data stays in specific jurisdictions (EU-only, UK-only, US-only) and is processed only by nodes that meet frameworks like GDPR/DSGVO, HIPAA, PCI DSS, SOC 2, or BaFin-aligned controls.

This is where centralized clouds still have an advantage, but decentralized GPU providers are catching up.

GDPR/DSGVO, UK-GDPR & Data Residency for AI and Rendering Workloads

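In the EU and Germany, the GDPR/DSGVO requires a lawful basis and appropriate safeguards for processing personal data, and it restricts transfers outside the EEA unless the destination has an adequacy decision or other approved safeguards are in place. In practice, many organizations meet this by keeping data resident within the EEA.

Key implications: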

For anything involving personal data, you must know where nodes are located and under which law they operate.

Some decentralized networks now allow you to restrict jobs to EU-only or Germany-only nodes, enabling a “GDPR-compliant decentralized GPU cloud provider in Germany” scenario if contracts and technical controls align.

In the UK, UK-GDPR and the Data Protection Act impose similar obligations; regulators and NHS-adjacent guidance emphasize transparency and governance for AI and data use.

For pure rendering workloads (e.g., synthetic images without personal data), compliance is simpler, though clients may still demand EU/UK-only data residency.

HIPAA, PCI DSS, BaFin Guidance, Open Banking/PSD2

Beyond generic privacy law, sector regulations matter:

US Healthcare (HIPAA)

Any processing of PHI must comply with HIPAA’s Privacy and Security Rules, typically requiring BAAs, strict access controls, encryption, and audit trails.

Payments (PCI DSS, PSD2/Open Banking)

Card data and payment operations must comply with PCI DSS and PSD2/Open Banking rules around strong customer authentication and secure processing.

Germany Financial Services (BaFin)

Cloud outsourcing guidance expects transparent contracts, audit rights, security, and data location controls for financial institutions using cloud services.

If you’re building fraud models for a London fintech or risk models for a Frankfurt bank, you’ll generally:

Keep live cardholder or account data in a cloud/data center with PCI DSS and BaFin-aligned contracts.

Push only tokenized, pseudonymized, or synthetic datasets to decentralized GPU networks.
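One common way to pseudonymize identifiers before data leaves your primary environment is keyed hashing. The sketch below uses HMAC-SHA256 from the Python standard library; the record fields and the `prepare_record` helper are hypothetical, and this is one possible technique rather than a compliance recipe. Pseudonymized data is generally still treated as personal data under GDPR, so the secret key would need to stay in your main cloud.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed, deterministic token (HMAC-SHA256)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, id_fields: set, drop_fields: set, secret_key: bytes) -> dict:
    """Drop direct identifiers, tokenize join keys, and pass other fields through."""
    prepared = {}
    for field_name, value in record.items():
        if field_name in drop_fields:
            continue  # never leaves the regulated environment
        if field_name in id_fields:
            prepared[field_name] = pseudonymize(str(value), secret_key)
        else:
            prepared[field_name] = value
    return prepared


# Hypothetical transaction record; in practice the key comes from a secrets manager.
key = b"replace-with-a-managed-secret"
record = {"account_id": "DE-000123", "holder_name": "Jane Doe", "amount_eur": 42.50}
safe = prepare_record(record, id_fields={"account_id"}, drop_fields={"holder_name"}, secret_key=key)
print(sorted(safe))  # prints ['account_id', 'amount_eur']
```

Because the token is deterministic for a given key, the same account maps to the same token across datasets, which keeps joins and per-account features working during training.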

How Decentralized GPU Clouds Handle Jurisdictions, SOC 2, and ISO 27001

Mature decentralized GPU providers now increasingly offer:

Region filters (EU-only, US-only, etc.).

“Enterprise” or “verified” node tiers with audited data centers (SOC 2, ISO 27001).

Documentation mapping controls against frameworks like ISO 27001, SOC 2 and sometimes industry-specific security expectations.

Your due diligence should include:

DPAs and data processing terms

Evidence of SOC 2 / ISO 27001 for underlying data centers

Proof that jurisdictional filters are enforced technically (not just in marketing)

Mak It Solutions often helps clients document these controls for internal risk, legal, and procurement teams, especially in Germany, the UK, and EU institutions.

GEO-Based Deployment Strategies: US, UK, Germany & Wider Europe

In practice, most teams mix centralized and decentralized GPU clouds, co-locating GPU nodes or choosing networks with strong footprints in Frankfurt, Amsterdam, London, Dublin, or US hubs to balance latency, compliance, and cost.

Let’s look at concrete scenarios.

US Scenarios: AI Startups in San Francisco, New York, Austin

Typical US patterns:

San Francisco / Bay Area

AI startups keep core infrastructure on GCP or AWS us-west while bursting training jobs to decentralized GPUs in US-west and Canada to avoid local shortages.

New York

Fintechs and adtechs use us-east for low latency but experiment with decentralized GPU clouds for backtesting, model experimentation, and synthetic-data workloads.

Austin

Mix of gaming, creative studios, and AI product teams leveraging lower energy costs and strong connectivity to operate their own DePIN nodes, offsetting internal GPU costs.

For many, the winning pattern is hybrid: centralized cloud for stateful services and regulated data, decentralized GPU cloud for stateless, bursty compute.

UK & London Hubs: Fintech, Gaming, and NHS-Adjacent AI Use Cases

In the UK, London and Manchester are hubs for:

Fintech (Open Banking, PSD2 APIs)

Gaming & creative studios

NHS-related AI for imaging, triage, and operations

Deployment patterns:

Production systems and live personal data often remain in UK data centers (Azure UK South/West, AWS London, etc.).

Decentralized GPU clouds are used for:

Non-PHI experimentation on NHS-style datasets that have been anonymized.

Large-scale inference for gaming and advertising, where jurisdiction and latency are easier to control.

Frankfurt, Amsterdam, Dublin, Zurich, Paris for Low-Latency, Compliant GPU Access

Within the EU, key hubs for low-latency, compliant GPU access include:

Frankfurt (DE-CIX, financial hub, BaFin-sensitive workloads)

Amsterdam and Dublin (major cloud regions and data center density)

Zurich (for Swiss financial/privacy-sensitive workloads)

Paris (growing AI and HPC hub)

A common blueprint:

Use AWS/GCP/Azure regions in Frankfurt, Amsterdam, Dublin, Paris for your main cluster.

Connect decentralized GPU clouds with EU-only node filters to run big training jobs or rendering workloads.

Keep identity, secrets, and primary databases in your main cloud, while GPUs act like “remote workers” attached by secure networking.

This approach gives you EU data residency, GDPR alignment, and competitive GPU pricing, especially if you can negotiate enterprise plans with decentralized providers.

How to Evaluate and Deploy on a Decentralized GPU Cloud

A practical adoption path starts with a small POC, such as moving a single inference service or rendering job to a decentralized GPU cloud, then benchmarking cost, latency, and reliability against AWS or GCP before scaling more workloads.

Think of this as cloud vendor evaluation + tokenomics due diligence.

Pricing, Performance, Compliance, Support & Tokenomics

When shortlisting vendors, evaluate:

Pricing & Economics

GPU SKUs and hourly rates vs AWS/GCP/Azure in your regions.

Any lock-ins (minimum commitments, special tokens, withdrawal limits).

Performance

Supported frameworks (PyTorch, TensorFlow, Hugging Face, ONNX).

Benchmarks on your real models, not just synthetic tests.

Compliance & Security

Data residency controls (EU-only, UK-only, US-only).

Underlying data centers’ certifications (SOC 2, ISO 27001).

Any sector-specific support (HIPAA, PCI DSS, BaFin-aligned setups).

Support & Ecosystem

Documentation and SDK quality.

Enterprise support, SLAs, escalation paths.

Tokenomics & MiCA Impact

If rewards or payments involve tokens, how are they treated under MiCA and local tax rules in EU jurisdictions?

This checklist helps avoid surprises where a “cheap GPU” later causes a compliance or accounting headache.

Deploying a PyTorch or TensorFlow Model on a Decentralized GPU Network

Here’s a high-level, realistic step-by-step guide you can adapt for most platforms:

Pick a Network & Region

Choose a provider with GPUs near your users (e.g., Frankfurt for EU, London for UK, Virginia for US-East).

Enable any jurisdiction filters (EU-only, UK-only, etc.).

Containerize Your Model

Package your PyTorch or TensorFlow model into a Docker image with all dependencies.

Expose a simple HTTP or gRPC endpoint for inference (e.g., an HTTP API built with FastAPI or Flask).

Push Image to Registry

Use the provider’s registry or a private registry (AWS ECR, GCP Artifact Registry, etc.).

Ensure the decentralized nodes can authenticate to pull the image.

Define Job Specs / Deployment Config

Specify GPU type (A100/H100/4090), VRAM, CPU/RAM, and max replicas.

Add environment variables (model path, API keys, feature flags).

Submit Deployment

Use CLI, SDK, or web dashboard to create the deployment.

The network’s scheduler matches your job to suitable GPU nodes.

Configure Networking & Security

Restrict ingress (IP allowlists, mTLS, or API gateways).

If needed, set up a VPN or private tunnel from your main cloud/VPC.

Test & Benchmark

Run load tests from US, UK, and EU locations to gauge latency and throughput.

Compare against your existing AWS/GCP/Azure baseline on cost per 1,000 requests.

Monitor & Iterate

Stream logs and metrics into your observability stack.

Tweak GPU types, regions, and replica counts based on performance.

This sequence can be executed in days, not months, and gives you a data-driven view of whether decentralized GPUs are truly cheaper and reliable for your workload.
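The "Define Job Specs" step above can be sketched as a small, validated config object that you serialize and hand to a provider's CLI or SDK. The `JobSpec` fields, the allowed GPU list, the region label, and the registry URL are hypothetical; each network defines its own deployment format, so treat this as a shape to adapt rather than a real schema.

```python
import json
from dataclasses import asdict, dataclass, field

ALLOWED_GPUS = {"A100", "H100", "4090"}  # mirrors the GPU types named in the steps


@dataclass
class JobSpec:
    """Provider-agnostic deployment description (hypothetical schema)."""
    image: str
    gpu_type: str
    gpu_count: int = 1
    cpu_cores: int = 8
    ram_gb: int = 32
    max_replicas: int = 1
    regions: list = field(default_factory=list)  # jurisdiction filters
    env: dict = field(default_factory=dict)      # non-secret settings only

    def validate(self) -> None:
        if self.gpu_type not in ALLOWED_GPUS:
            raise ValueError(f"unsupported GPU type: {self.gpu_type}")
        if self.gpu_count < 1 or self.max_replicas < 1:
            raise ValueError("gpu_count and max_replicas must be at least 1")
        if not self.regions:
            raise ValueError("set at least one region filter before submitting")

    def to_json(self) -> str:
        self.validate()
        return json.dumps(asdict(self), indent=2, sort_keys=True)


spec = JobSpec(
    image="registry.example.com/llm-inference:1.0",  # placeholder image name
    gpu_type="H100",
    max_replicas=3,
    regions=["eu-only"],
    env={"MODEL_PATH": "/models/current"},
)
print(spec.to_json())
```

Validating the region filter client-side is a cheap safeguard: a spec with no jurisdiction constraint fails before it ever reaches the network. Secrets such as API keys are better injected through the provider's secret mechanism than stored in `env`.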

Running a Pilot: POCs, Benchmarks, and Cost Comparisons vs AWS/GCP/Azure

To make the POC credible internally:

Define a single service (e.g., one LLM endpoint or a specific Blender render job).

Capture metrics:

Latency percentiles (p50, p95) by region.

Error rates and retries.

Total GPU hours, storage, and egress.

Then:

Run the same service on your current cloud as a baseline.

Compare cost per 1,000 inferences or cost per rendered frame.

Present results to engineering, finance, and compliance stakeholders.
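The metrics above can be reduced to a few comparable numbers per provider. This sketch uses a nearest-rank percentile and synthetic latency samples; the `summarize` helper and its output keys are illustrative names, and you would feed it real measurements from your load tests.

```python
import math


def percentile(samples: list, pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not samples:
        raise ValueError("no samples collected")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: list, errors: int, total_cost_usd: float) -> dict:
    """Collapse one POC run into the figures stakeholders compare."""
    requests = len(latencies_ms) + errors
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "error_rate": errors / requests,
        "cost_per_1k_usd": total_cost_usd / requests * 1000,
    }


# Synthetic data: 100 successful requests with latencies of 1..100 ms.
baseline = summarize(list(range(1, 101)), errors=0, total_cost_usd=2.00)
print(baseline)
```

Running `summarize` once per provider and per region gives you a like-for-like table; for rendering, the same structure works with frame times and cost per frame in place of request latency and cost per 1,000 inferences.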

Mak It Solutions can help design and execute this POC, including performance testing, analytics dashboards, and integration into your existing BI/Analytics stack.

Where Decentralized GPU Clouds Fit in Your 2025–2027 Stack

As AI infrastructure spending accelerates (IDC projects it will reach hundreds of billions of dollars by 2029, driven mostly by accelerated servers), decentralized GPU clouds are likely to play a supporting but important role.

Hybrid & Multi-Cloud Architectures with Decentralized GPUs as a Cost Valve

A realistic 2025–2027 architecture:

Primary clouds (AWS, GCP, Azure) host your core apps, data, and managed services.

Decentralized GPU clouds act as:

A cost valve for training and inference.

A burst capacity source during product launches or seasonal peaks.

A global render pipeline for creative workloads.

This hybrid approach gives CIOs and CTOs the flexibility to ride AI demand without being locked into a single pricing model or capacity constraint.

Token Incentives, Sustainability, and Hardware Cycles

Token incentives will keep evolving, especially under MiCA and similar regulations. Over time, the market is likely to reward:

Efficient, energy-aware nodes (sustainability premiums).

Transparent, verified performance and compliance.

Hardware cycles where older GPUs move into DePIN networks as newer models roll out in hyperscalers.

Decentralized networks might become the secondary market for GPUs, extending the useful life of hardware and helping offset environmental impact.

When to Move from Experiment to Production in US/UK/Germany/EU

A sensible roadmap:

2025

Run POCs in US, UK, and EU regions on low-risk workloads.

2026

Move non-critical production inference and rendering into decentralized GPU clouds where benchmarks prove value.

2027

Integrate decentralized GPUs as a standard part of your multi-cloud policy, with clear governance, vendor management, and FinOps reporting.

For many teams, the tipping point is when cost and capacity benefits consistently outweigh the additional operational and compliance overhead, and that moment is arriving faster than most roadmaps anticipated.

FAQs

Q : Are decentralized GPU clouds reliable enough for mission-critical enterprise workloads?

A : Decentralized GPU clouds can be reliable for many AI and rendering workloads, but they typically don’t match the end-to-end enterprise guarantees of AWS, GCP, or Azure. Reliability comes from protocol-level redundancy, reputation systems, and sometimes staking/slashing models rather than classic cloud SLAs. For mission-critical workloads in banking, healthcare, or public sector, most organizations keep systems of record and regulated data on traditional clouds or on-prem while using decentralized GPUs for bursty training, large-scale experimentation, or rendering. Over time, as more nodes operate out of SOC 2/ISO 27001 data centers and enterprise SLAs mature, we’ll see a gradual shift toward higher-risk workloads.

Q : How do decentralized GPU networks verify that nodes actually run my AI or rendering jobs correctly?

A : Decentralized GPU networks typically combine cryptographic and statistical verification with reputation and economic incentives. For example, they may split a job into chunks, send them to multiple nodes, and compare outputs; if results diverge, dishonest nodes can be penalized or slashed. Some networks use checkpointing and random spot-checks on intermediate outputs, while others design proof-of-compute schemes that cryptographically attest to execution. On top of that, nodes accumulate reputation scores based on historical success and latency, and customers can choose to route workloads only to higher-tier or audited nodes. For very sensitive use cases, many enterprises still add their own validation layer re-checking outputs or running canary jobs to detect misbehavior.
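The replicate-and-compare idea can be illustrated with a simple majority vote over output hashes. This is a sketch of the general technique, not any network's actual protocol; the node IDs and payloads are invented, and bit-exact hashing assumes deterministic outputs, which GPU floating-point workloads may not always produce (a real scheme might compare within a tolerance instead).

```python
import hashlib
from collections import Counter


def digest(output: bytes) -> str:
    """Fingerprint a node's output so results can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()


def verify_by_replication(results: dict):
    """Majority-vote over replicated outputs for one job chunk.

    results maps node_id -> raw output bytes.
    Returns (accepted_digest or None, sorted list of disagreeing node IDs).
    """
    if not results:
        raise ValueError("no results to verify")
    digests = {node: digest(output) for node, output in results.items()}
    winner, votes = Counter(digests.values()).most_common(1)[0]
    if votes <= len(results) / 2:
        # No strict majority: accept nothing and flag every node for review.
        return None, sorted(digests)
    dissenters = sorted(node for node, d in digests.items() if d != winner)
    return winner, dissenters


# Three replicas of the same chunk; one node returns something different.
accepted, suspects = verify_by_replication({
    "node-a": b"frame-42-pixels",
    "node-b": b"frame-42-pixels",
    "node-c": b"corrupted-output",
})
print(suspects)  # prints ['node-c']
```

Replication multiplies compute cost, so it is usually applied to a sample of chunks or as canary jobs rather than to every workload.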

Q : Can I keep all personal data inside the EU or UK while using a decentralized GPU cloud?

A : Yes, in principle, if your chosen provider offers region and jurisdiction filters and backs them with enforceable contracts. Many decentralized GPU platforms now allow you to restrict workloads to EU-only or UK-only nodes, which is important for GDPR/DSGVO and UK-GDPR compliance. However, you must verify that: (1) nodes are physically located in the stated jurisdictions, (2) underlying data centers have appropriate certifications (e.g., ISO 27001, SOC 2), and (3) contracts include appropriate data processing and audit rights. For highly regulated workloads, a common pattern is to keep live personal data in a traditional EU or UK cloud while sending only pseudonymized or synthetic data to decentralized GPUs for training and evaluation.

Q : What’s the difference between a DePIN GPU network and traditional crypto mining or staking?

A : Traditional crypto mining or staking usually secures a blockchain (e.g., proof-of-work or proof-of-stake) and earns rewards for contributing hashpower or capital, regardless of whether any useful work is done beyond consensus. A DePIN GPU network, by contrast, pays providers for performing useful external work such as AI training, inference, or 3D rendering. The GPU cycles you contribute are directly tied to workloads from real users, often channeled through a marketplace. Tokens or reward mechanisms still exist, but they’re linked to actual compute jobs, not just block validation. From a business standpoint, DePIN feels less like mining and more like joining a decentralized cloud provider, with corresponding obligations for uptime, security, and sometimes regulatory compliance.

Q : How do token incentives and GPU rewards affect the real cost of using a decentralized GPU cloud over time?

A : Token incentives can lower effective costs in the short term if networks subsidize early adopters with extra rewards or discounts, but they also introduce volatility. When token prices are high, GPU providers may demand more tokens (raising USD-equivalent prices); when prices fall, networks might adjust emissions or pricing tiers. For buyers, the safest strategy is to model cost in fiat terms (USD, GBP, EUR) and treat any token rebates as upside, not as a core assumption in your business case. Over multi-year horizons, especially in the EU under MiCA, you’ll also need to consider accounting and tax treatment for any tokens earned or held. This is why many enterprises prefer platforms that expose stable, fiat-denominated pricing, even if settlement happens on-chain behind the scenes.
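Modeling cost in fiat terms can be as simple as budgeting a range instead of a point estimate. The sketch below assumes a fixed token-denominated price per GPU-hour and a symmetric price swing; the token quantities, spot price, and 50% swing are invented for illustration, and real pricing mechanics differ by network.

```python
def usd_cost(gpu_hours: float, tokens_per_gpu_hour: float, token_price_usd: float) -> float:
    """Fiat cost of a workload priced in tokens."""
    return gpu_hours * tokens_per_gpu_hour * token_price_usd


def budget_range(gpu_hours, tokens_per_gpu_hour, spot_price_usd, swing=0.5):
    """Return (low, base, high) USD cost if the token moves by +/- swing."""
    if not 0 <= swing < 1:
        raise ValueError("swing must be in [0, 1)")
    low = usd_cost(gpu_hours, tokens_per_gpu_hour, spot_price_usd * (1 - swing))
    base = usd_cost(gpu_hours, tokens_per_gpu_hour, spot_price_usd)
    high = usd_cost(gpu_hours, tokens_per_gpu_hour, spot_price_usd * (1 + swing))
    return low, base, high


# Hypothetical month: 10,000 GPU-hours at 2 tokens per hour, token at $0.50.
low, base, high = budget_range(10_000, 2.0, 0.50, swing=0.5)
print(f"budget between ${low:,.0f} and ${high:,.0f} (base ${base:,.0f})")
```

Building the business case on the `high` figure and treating anything below it as upside keeps token volatility out of your core assumptions.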

Key Takeaways

Decentralized GPU clouds aggregate GPUs from many providers into a marketplace, offering potentially lower prices and better capacity than a single hyperscaler—especially for LLM training, inference, and rendering.

The strongest fit is for bursty, large-scale, or non-critical workloads, where you can tolerate some extra integration complexity in exchange for significant cost savings.

Compliance and data residency remain crucial: for GDPR/DSGVO, UK-GDPR, HIPAA, PCI DSS, and BaFin-sensitive workloads, you must enforce region filters, use audited data centers, and keep highly regulated data in more traditional environments.

A hybrid strategy (centralized cloud for stateful services and sensitive data, decentralized GPUs as a cost valve and burst layer) is likely to dominate from 2025–2027.

Success depends on rigorous evaluation and POCs: benchmark pricing, performance, reliability, and support across US, UK, and EU regions before committing to any provider or token ecosystem.

If you’re evaluating a decentralized GPU cloud vs AWS, GCP, or Azure, you don’t have to do it alone. Mak It Solutions can help you design the architecture, run real-world POCs, and build dashboards that tie GPU spend directly to AI outcomes.

Whether you’re an AI startup in San Francisco, a fintech in London, or a data-driven enterprise in Frankfurt, we can co-create a hybrid GPU strategy that balances cost, compliance, and performance.